5 AI Implementation Failures and the Lessons Learned from Them

Artificial intelligence promises revolutionary advances, but implementing it is not without pitfalls. This article examines five AI failures, real-world cases where the technology fell short of expectations. Drawing on insights from industry experts, it distills lessons that can guide future AI deployments and help avoid costly mistakes.

- AI Copywriting Tool Lacks Human Touch

- Chatbot Fails to Address Root Issues

- Ticket Routing AI Misses Nuanced Inquiries

- Automated Consultations Hinder Client Relationships

- Generic AI Forecasting Causes Costly Errors

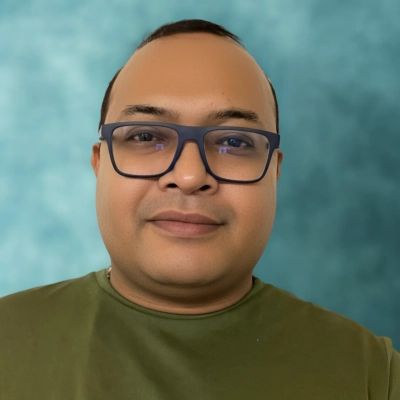

AI Copywriting Tool Lacks Human Touch

When AI started becoming mainstream in marketing, I was one of the early adopters eager to integrate it into Nerdigital's workflows. One of our first experiments was using an AI tool to automate copywriting for ad campaigns. On paper, it seemed like a dream—it promised speed, scale, and lower costs. We imagined freeing up our team's time for more strategic work while letting the AI handle the repetitive copy tasks.

The reality was very different. The tool churned out technically correct ad copy, but it lacked the nuance, cultural sensitivity, and emotional intelligence that make campaigns resonate. I remember one campaign in particular for a lifestyle client: the AI-generated copy checked every SEO and keyword box but fell flat in testing. Engagement rates dropped, and the brand voice felt generic—almost robotic. What was meant to save time actually created more work, because the team had to rewrite most of what the AI produced.

The key lesson I took away was that AI isn't a plug-and-play solution. It's a partner, not a replacement. Expecting it to replicate human creativity was the wrong approach. Where it excels is in augmenting processes—like analyzing audience behavior, predicting trends, or testing variations at scale—but the spark of storytelling still has to come from people.

That experience changed how I frame AI inside the company. I tell my team: let's use AI to handle the heavy lifting in data and patterns, so we can spend more time where machines can't compete—understanding human emotion, crafting narratives, and building relationships.

Ironically, the "failed" implementation turned out to be a success in another way. It forced me to rethink the balance between technology and human expertise. Now, instead of chasing full automation, we ask: how can AI make us more human in our work? That mindset shift has been invaluable for every AI initiative since.

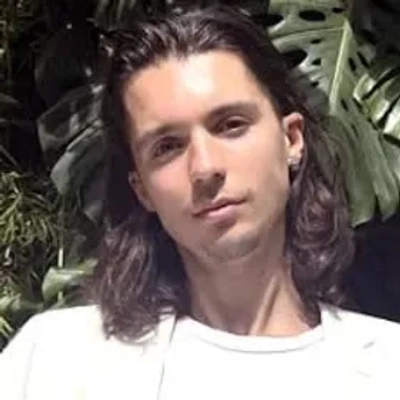

Chatbot Fails to Address Root Issues

We implemented an AI-powered customer service chatbot expecting it to reduce support ticket volume by 60%. It achieved only a 15% reduction, because we had focused on automating responses rather than understanding why customers were contacting us in the first place. The key lesson was that AI amplifies existing processes; it does not fix broken ones.

The implementation seemed logical: analyze common support queries, train the AI to provide standard responses, and deflect routine questions before they reached human agents. Technically, the system worked as designed, answering frequently asked questions accurately within seconds.

However, we discovered that most customer contacts weren't seeking information but expressing frustration about unclear processes, confusing interfaces, or unmet expectations. The AI could answer "How do I reset my password?" efficiently, but couldn't address the underlying UX problems that made password resets unnecessarily frequent and complicated.

Customers would interact with the chatbot, get technically correct responses, and then immediately escalate to human agents because their real problems remained unsolved. Rather than reducing friction, the AI added another layer of it, increasing customer frustration while consuming significant implementation resources.

The critical lesson was that successful AI implementation requires process optimization before automation. Instead of using AI to handle broken customer experiences more efficiently, we should have first eliminated the root causes of common support requests through better product design and clearer communication.

The strategic insight is that AI multiplies the effectiveness of good processes but exposes the flaws in bad ones. Before implementing any AI solution, audit whether the underlying process creates value or just manages problems that shouldn't exist. AI should enhance smooth operations, not automate frustrating experiences more quickly.

This experience transformed our approach from "automate everything" to "optimize first, then automate."

Ticket Routing AI Misses Nuanced Inquiries

I once oversaw an AI implementation aimed at automating our customer support ticket routing. The system was designed to categorize and assign tickets based on keywords and urgency, but within the first month we noticed a high rate of misrouted tickets and delayed responses. Many customer issues were being flagged incorrectly, which frustrated both our clients and our support team.

The key lesson I learned was the importance of thoroughly training AI with diverse, real-world data and continuously monitoring its performance during the initial rollout. We hadn't accounted for the nuances in customer inquiries, and assuming the AI could handle edge cases without proper testing was a costly mistake.

After this experience, I implemented a phased approach for all AI projects, combining human oversight with gradual automation. It taught me that AI is powerful, but success depends on careful planning, realistic expectations, and ongoing evaluation.
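The routing setup described above can be sketched in a few lines. This is a minimal illustration that assumes simple keyword matching. The queue names, keyword lists, and the `route_ticket` helper are hypothetical and are not the contributor's actual system. The sketch shows the safeguard a phased rollout relies on: when keywords match nothing, or match several queues equally, the ticket goes to a person instead of being guessed at.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword lists per queue. A production system would derive these
# from labeled historical tickets rather than hard-coding them.
ROUTING_KEYWORDS: dict[str, set[str]] = {
    "billing": {"invoice", "refund", "charge", "payment"},
    "technical": {"error", "crash", "bug", "login"},
    "account": {"password", "email", "profile", "cancel"},
}
URGENT_WORDS = {"urgent", "asap", "down", "outage"}
HUMAN_REVIEW = "human_review"  # hypothetical fallback queue staffed by people


@dataclass
class Routing:
    queue: str
    urgent: bool


def route_ticket(text: str) -> Routing:
    """Route a ticket by keyword hits, deferring ambiguous cases to a human."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    urgent = bool(words & URGENT_WORDS)

    # Count how many of each queue's keywords appear in the ticket.
    scores = {queue: len(words & kws) for queue, kws in ROUTING_KEYWORDS.items()}
    best = max(scores.values())
    winners = [queue for queue, score in scores.items() if score == best]

    # No keyword match, or a tie between queues: these are the nuanced
    # inquiries that keyword rules misroute, so hand them to a person.
    if best == 0 or len(winners) > 1:
        return Routing(HUMAN_REVIEW, urgent)
    return Routing(winners[0], urgent)


if __name__ == "__main__":
    print(route_ticket("I need a refund for this charge"))
    print(route_ticket("URGENT: the site is down"))
    print(route_ticket("Refund please, my login is broken"))
```

During a phased rollout, the share of tickets landing in `human_review` is worth monitoring. Those tickets are also the diverse, real-world examples that can be labeled and used to improve the routing before more of it is automated.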

Automated Consultations Hinder Client Relationships

We implemented an AI chatbot to handle initial client consultations, expecting it to qualify leads efficiently. Instead, it frustrated prospects who wanted human connection during the decision-making process.

The lesson was that AI works best for information gathering and processing, not relationship building. We redesigned the system so AI handles scheduling, preliminary questions, and research compilation, while humans focus on strategy discussions and relationship development. This hybrid approach increased consultation conversion rates by 25% compared to our original all-human process.

Generic AI Forecasting Causes Costly Errors

We once tried using an AI-based forecasting tool to predict hardware failures on a client's servers. The goal was to move from reactive maintenance to predictive action. The problem was that the tool relied heavily on generic datasets and didn't account for the client's specific usage patterns. It flagged too many false positives, which led to unnecessary hardware replacements and added costs without improving uptime.

The key lesson I took away is that AI isn't plug-and-play; you have to tailor it to your environment. I learned to always pilot these tools on a smaller dataset and validate them against real-world conditions before scaling. Now I make sure our AI implementations are trained on client-specific data, which avoids wasted effort and delivers the value clients expect.
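Here is one way to make "validate against real-world conditions" concrete. This minimal sketch assumes the pilot records which servers the tool flagged and which servers actually failed in the same window. The server names, the choice of metrics, and the 0.5 precision threshold in the hypothetical `ready_to_scale` gate are illustrative assumptions, not the contributor's actual criteria.

```python
# Hypothetical pilot check: compare the forecaster's flags with what actually
# failed during the same observation window before rolling it out fleet-wide.


def evaluate_pilot(flagged: set[str], failed: set[str], fleet: set[str]) -> dict[str, float]:
    """Return precision, recall, and false-positive rate for one pilot window."""
    true_pos = len(flagged & failed)   # flagged servers that really failed
    false_pos = len(flagged - failed)  # flagged servers that were fine
    healthy = fleet - failed
    return {
        "precision": true_pos / len(flagged) if flagged else 0.0,
        "recall": true_pos / len(failed) if failed else 0.0,
        "false_positive_rate": false_pos / len(healthy) if healthy else 0.0,
    }


def ready_to_scale(metrics: dict[str, float], min_precision: float = 0.5) -> bool:
    """Hypothetical gate: only scale if most flags point at real failures."""
    return metrics["precision"] >= min_precision


if __name__ == "__main__":
    fleet = {f"srv{i}" for i in range(10)}
    failed = {"srv1", "srv2"}                   # observed during the pilot
    flagged = {"srv1", "srv3", "srv4", "srv5"}  # what the tool predicted
    metrics = evaluate_pilot(flagged, failed, fleet)
    print(metrics, ready_to_scale(metrics))
```

In this made-up pilot, three of the four flags are false positives (precision 0.25), so the gate advises against scaling. A generic model would trip this check on an unfamiliar fleet before it caused unnecessary replacements.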